[bibshow file=library.bib key_format=cite]

[wpcol_1half id="" class="" style=""]

[/wpcol_1half]

[wpcol_1half_end id="" class="" style=""]

Background

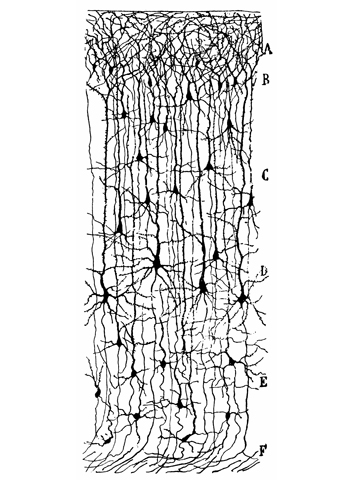

The brain is a fascinating device that remains to be understood. Made of approximately $10^{11}$ neurons, each of them connected to about 10000 targets, it is a complex and unique network with more than $10^{15}$ connections interacting with each other in an efficient and robust manner. We all share the same organization at a macroscopic scale and the same generic cortical areas: inputs coming from our senses are wired in a reproducible manner at a large scale, but the fine details of those connections, which make each of us unique, are still unknown.

Understanding how the primary sensory areas of the neocortex are structured in order to process sensory inputs is a crucial step in analysing the mechanisms underlying the functional role, from an algorithmic point of view, of cerebral activity. This understanding of the sensory dynamics, at a large scale level, implies using simplified models of neurons, such as the "integrate-and-fire models", and a particular framework, the "balanced" random network, which allows the recreation of dynamical regimes of conductances close to those observed in vivo, in which neurons spike at low rates and with an irregular discharge.

[/wpcol_1half_end]

[wpcol_1half id="" class="" style=""]

The Neuron

Neurons are, with glial cells, the fundamental processing units of the brain. Because of their fast time constants, neurons are considered responsible for most of the information processing, while glial cells are thought to be involved in much slower mechanisms such as plasticity or metabolism. Therefore, as a (pretty strong) first assumption, glial cells will be ignored in the following.

Neurons basically act as spatio-temporal integrators, exchanging binary information through action potentials: they receive inputs from pre-synaptic cells, and when "enough" inputs are received, close together either in time or in space and summed with complex non-linear interactions depending on the cell morphology, they emit an action potential and send it to their targets. To picture it, imagine a bathtub with a hole at the bottom, and thus leaking water. Incoming action potentials are like buckets of water poured into the bathtub over time. If enough of them arrive during a short period of time (and thus compensate for the leak), the water overflows: the neuron emits an action potential, and its voltage $V_{\mathrm{m}}$ (equivalent here to the water level) is reset to a default value. This is the principle of the "integrate-and-fire" neuron. Of course, this model is a crude simplification of all the biological processes taking place in a real neuron. It discards all the non-linearities and the complex operations that may be achieved in the dendrites. However, because of its tractability, from both a computational and an analytical point of view, it is widely used in computational neuroscience.

[/wpcol_1half]

[wpcol_1half_end id="" class="" style=""]

[/wpcol_1half_end]

[wpcol_1half id="" class="" style=""]

[/wpcol_1half]

[wpcol_1half_end id="" class="" style=""]

The Integrate-and-fire model

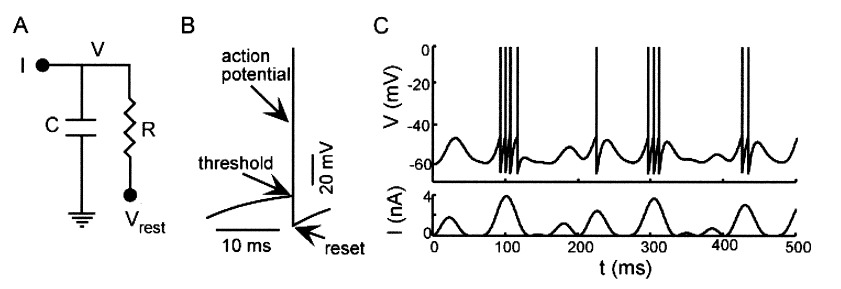

From a more mathematical point of view, inputs to the neurons are described as ionic currents flowing through the cell membrane when neurotransmitters are released. Their sum is seen as a physical time-dependent current $I(t)$, and the membrane is described as an $RC$ circuit, charged by $I(t)$ (see Figure taken from [bibcite key=Abbott1999]). When the membrane potential $V_{\mathrm{m}}$ reaches a threshold value $V_{\mathrm{thresh}}$, a spike is emitted and the membrane potential is reset. In its basic form, the equation of the integrate-and-fire model is:

\begin{equation}

\tau_{\mathrm{m}} \frac{dV_{\mathrm{m}}(t)}{dt} = -V_{\mathrm{m}}(t) + RI(t)

\end{equation}

where $R$ is the resistance of the membrane, with $\tau_{\mathrm{m}} = RC$.

[/wpcol_1half_end]
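As a rough illustration of the basic integrate-and-fire equation, the following sketch integrates it with a forward-Euler scheme. The time step `dt`, the normalized units (threshold at 1, reset at 0, `resistance` equal to 1) and the function name `simulate_lif` are assumptions chosen for the example, not values taken from the text.

```python
import numpy as np

def simulate_lif(current, dt=0.1, tau_m=20.0, resistance=1.0,
                 v_thresh=1.0, v_reset=0.0):
    """Forward-Euler integration of tau_m * dV/dt = -V + R * I(t).

    `current` holds one input value per time step of length `dt` (ms).
    Units are normalized (assumption): threshold at 1, reset at 0.
    Returns the voltage trace and the list of spike times (ms).
    """
    v = v_reset
    voltages = np.empty(len(current))
    spike_times = []
    for step, i_ext in enumerate(current):
        # Leak pulls V towards 0, the input pushes it towards R * I
        v += dt / tau_m * (-v + resistance * i_ext)
        if v >= v_thresh:
            # Threshold crossing: record a spike and reset the potential
            spike_times.append(step * dt)
            v = v_reset
        voltages[step] = v
    return voltages, spike_times

n_steps = 5000  # 500 ms at dt = 0.1 ms
# Constant input above threshold (R * I > v_thresh): regular spiking
_, spikes_strong = simulate_lif(np.full(n_steps, 1.5))
# Constant input below threshold: the leak wins and no spike is emitted
_, spikes_weak = simulate_lif(np.full(n_steps, 0.8))
print(len(spikes_strong), len(spikes_weak))
```

For a constant input with $RI > V_{\mathrm{thresh}}$ and a reset at 0, the equation can be solved in closed form, giving an inter-spike interval of $\tau_{\mathrm{m}} \ln\left(RI / (RI - V_{\mathrm{thresh}})\right)$; the simulated interval should approach this value as `dt` shrinks.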

To be more precise, the neuronal input was approximated above as a fluctuating current $I(t)$, but synaptic drives are better modelled by fluctuating conductances: the amplitudes of the post-synaptic potentials (PSPs) evoked by neurotransmitter release from pre-synaptic neurons depend on the post-synaptic depolarization level. Many studies now focus on this integrate-and-fire model with conductance-based synapses [bibcite key=Destexhe2001,Tiesinga2000,Cessac2008,Vogels2005]. The equation of the membrane potential dynamics is then:

\begin{equation}

\tau_{\mathrm{m}} \frac{dV_{\mathrm{m}}(t)}{dt} = (V_{\mathrm{rest}}-V_{\mathrm{m}}(t)) + g_{\mathrm{exc}}(t)(E_{\mathrm{exc}}-V_{\mathrm{m}}(t)) + g_{\mathrm{inh}}(t)(E_{\mathrm{inh}}-V_{\mathrm{m}}(t))

\end{equation}

When $V_{\mathrm{m}}$ reaches the spiking threshold $V_{\mathrm{thresh}}$, a spike is generated and the membrane potential is held at the resting potential for a refractory period of duration $\tau_{\mathrm{ref}}$. Synaptic connections are modelled as conductance changes: when a pre-synaptic spike is emitted, the corresponding conductance is incremented, $g \rightarrow g + \delta g$, followed by exponential decay with time constants $\tau_{\mathrm{exc}}$ and $\tau_{\mathrm{inh}}$ for excitatory and inhibitory post-synaptic potentials, respectively. The shape of the PSP does not have to be exponential: other shapes can be used, such as alpha synapses, $g(t) \propto t\, e^{-t/\tau}$, or double-exponential synapses, $g(t) \propto e^{-t/\tau_{\mathrm{decay}}} - e^{-t/\tau_{\mathrm{rise}}}$. $E_{\mathrm{exc}}$ and $E_{\mathrm{inh}}$ are the reversal potentials for excitation and inhibition.
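The conductance-based dynamics described above can be sketched as follows. This is a minimal forward-Euler illustration, not a reference implementation: all parameter values (potentials in mV, times in ms, conductance jumps `dg_exc` and `dg_inh` expressed relative to the leak) and the function name `simulate_cond_lif` are assumptions made for the example, and only exponential synapses are covered.

```python
import numpy as np

def simulate_cond_lif(exc_spikes, inh_spikes, dt=0.1, tau_m=20.0,
                      v_rest=-70.0, v_thresh=-50.0, tau_ref=5.0,
                      e_exc=0.0, e_inh=-80.0, tau_exc=5.0, tau_inh=10.0,
                      dg_exc=0.1, dg_inh=0.1):
    """Conductance-based integrate-and-fire neuron with exponential synapses.

    exc_spikes / inh_spikes: number of pre-synaptic spikes arriving at
    each time step. All default values are illustrative assumptions.
    """
    n_ref = int(round(tau_ref / dt))  # refractory period in steps
    v, g_exc, g_inh = v_rest, 0.0, 0.0
    ref_left = 0
    voltages = np.empty(len(exc_spikes))
    spike_times = []
    for step in range(len(exc_spikes)):
        # Each incoming spike increments the conductance by a fixed amount
        g_exc += dg_exc * exc_spikes[step]
        g_inh += dg_inh * inh_spikes[step]
        if ref_left > 0:
            # Potential is held at rest during the refractory period
            v = v_rest
            ref_left -= 1
        else:
            dv = ((v_rest - v) + g_exc * (e_exc - v)
                  + g_inh * (e_inh - v))
            v += dt / tau_m * dv
            if v >= v_thresh:
                spike_times.append(step * dt)
                v = v_rest
                ref_left = n_ref
        # Exponential decay of both conductances
        g_exc -= dt / tau_exc * g_exc
        g_inh -= dt / tau_inh * g_inh
        voltages[step] = v
    return voltages, spike_times

# Hypothetical Poisson input: mean number of spikes per step is arbitrary
rng = np.random.default_rng(0)
n_steps = 10000  # 1 s at dt = 0.1 ms
exc = rng.poisson(0.8, n_steps)
inh = rng.poisson(0.2, n_steps)
voltages, spikes = simulate_cond_lif(exc, inh)
print(f"{len(spikes)} spikes in 1 s")
```

Because each synaptic term is multiplied by the distance between the membrane potential and its reversal potential, the same conductance jump has a smaller effect as the potential approaches $E_{\mathrm{exc}}$ or $E_{\mathrm{inh}}$, which is the main difference from the current-based model.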

[wpcol_1half id="" class="" style=""]

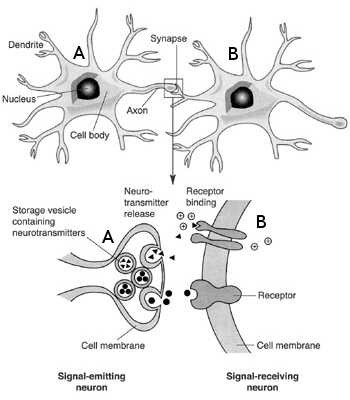

The chemical synapse

The synapse is a key element where the axon of a pre-synaptic neuron connects with the dendritic arbour of a post-synaptic neuron. It transmits the electrical signal emitted by the pre-synaptic neuron to the post-synaptic one. Synapses are crucial in shaping a network's structure, and their ability to modify their efficacy according to the activity of the pre- and post-synaptic neurons is at the origin of synaptic plasticity and memory retention in neuronal networks.

Synapses can be either chemical or electrical but, again as a simplification, the latter will not be considered here. In a chemical synapse, the pre-synaptic neuron releases a neurotransmitter into the synaptic cleft, which then binds to receptors embedded in the plasma membrane of the post-synaptic neuron. These neurotransmitters are stored in vesicles and regenerated continuously, but too strong a stimulation of the synapse may lead to a temporary lack of neurotransmitter, or to a saturation of the post-synaptic receptors. This short-term plasticity phenomenon is called synaptic adaptation.

The type of neurotransmitter received by the post-synaptic neuron influences its activity. The synaptic current vanishes at a given reversal potential: if this reversal potential is below $V_{\mathrm{thresh}}$ (the voltage threshold for triggering an action potential), the net synaptic effect inhibits the neuron, and if it is above, it excites the cell. Classical neurotransmitters such as glutamate lead to a depolarization (i.e. an increase of the membrane potential), and the synapse is said to be excitatory. In contrast, gamma-aminobutyric acid (GABA) leads to a hyperpolarization (a decrease of the membrane potential), and the synapse is said to be inhibitory. In general, a given neuron produces only one type of neurotransmitter, being either only excitatory or only inhibitory. This is known as Dale's principle, and is a common assumption in models of neuronal networks.
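The rule relating the reversal potential to the threshold can be made concrete with a small sketch. The numerical values (threshold at -50 mV, excitatory reversal at 0 mV, inhibitory reversal at -80 mV) and the helper names `synaptic_drive` and `synapse_type` are illustrative assumptions, not values from the text.

```python
def synaptic_drive(g_syn, e_rev, v_m):
    """Synaptic term g * (E - V): positive values depolarize the cell."""
    return g_syn * (e_rev - v_m)

def synapse_type(e_rev, v_thresh):
    """Classify a synapse by comparing its reversal potential to threshold."""
    return "excitatory" if e_rev > v_thresh else "inhibitory"

V_THRESH = -50.0  # mV, assumed spiking threshold
E_EXC = 0.0       # mV, assumed reversal potential of an excitatory synapse
E_INH = -80.0     # mV, assumed reversal potential of an inhibitory synapse

for name, e_rev in [("exc", E_EXC), ("inh", E_INH)]:
    for v_m in (-85.0, -60.0):
        drive = synaptic_drive(1.0, e_rev, v_m)
        print(name, synapse_type(e_rev, V_THRESH), v_m, drive)
```

Note that the inhibitory drive is positive when the cell sits below `E_INH`, yet the synapse is still classified as inhibitory: it can only pull the potential towards a value below threshold, so it can never trigger a spike on its own.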

[/wpcol_1half]

[wpcol_1half_end id="" class="" style=""]

[/wpcol_1half_end]